GenAI on AWS

GenAI services on AWS

AWS provides a comprehensive set of services that help you implement generative AI solutions.

With AWS, you can use advanced machine learning models, robust data processing capabilities, and secure infrastructure to integrate generative AI into your operations.

You can streamline processes, optimize workflows, and deliver exceptional value to your customers, all while maintaining data security and compliance.

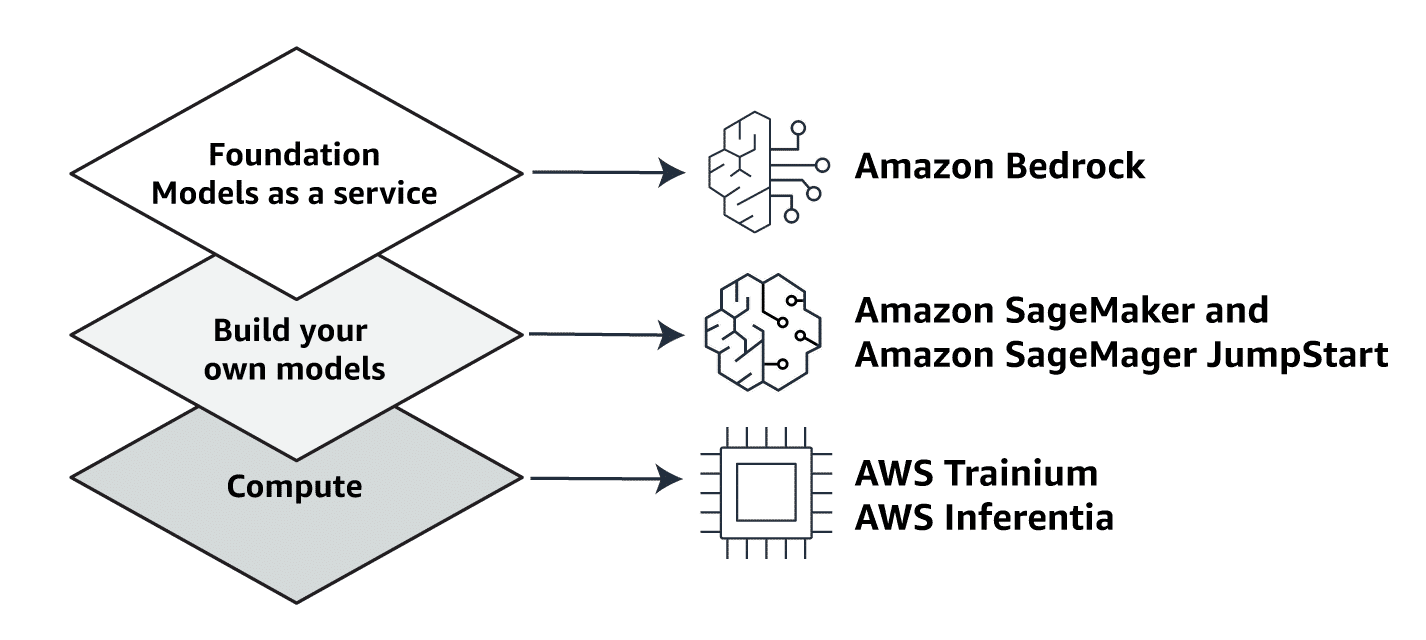

GenAI layers on AWS

Image created by Amazon Web Services.

Amazon focuses on three macro layers for generative AI: compute, building your own models, and foundation models (FMs).

Compute

First, there is the compute required to train and run large language models (LLMs). AWS has designed two families of specialized chips for this purpose:

- AWS Inferentia and AWS Trainium are purpose-built ML accelerators that AWS designed from the ground up.

- The first generation of AWS Inferentia delivers significant performance and cost-savings benefits for deploying smaller models.

- AWS Trainium and AWS Inferentia2 are built for training and deploying, respectively, ultra-large generative AI models with hundreds of billions of parameters.
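As a rough illustration of how the chip choices above map to workloads, the following Python sketch pairs each accelerator with an example Amazon SageMaker instance type. The helper name `pick_instance`, the mapping itself, and the specific instance sizes are illustrative assumptions, not an AWS sizing recommendation; available sizes differ by Region and service.

```python
from typing import Tuple

# Illustrative mapping only: example SageMaker instance types for each AWS
# accelerator family. Check current SageMaker instance documentation before use.
ACCELERATOR_INSTANCES = {
    # First-generation AWS Inferentia: cost-efficient inference for smaller models
    "inference_small": ("AWS Inferentia", "ml.inf1.xlarge"),
    # AWS Inferentia2: inference for ultra-large generative AI models
    "inference_large": ("AWS Inferentia2", "ml.inf2.48xlarge"),
    # AWS Trainium: training large models
    "training": ("AWS Trainium", "ml.trn1.32xlarge"),
}


def pick_instance(task: str, large_model: bool) -> Tuple[str, str]:
    """Return (chip, example instance type) for a "train" or "infer" task.

    large_model only affects inference; training always maps to Trainium here.
    """
    if task == "train":
        return ACCELERATOR_INSTANCES["training"]
    if task == "infer":
        key = "inference_large" if large_model else "inference_small"
        return ACCELERATOR_INSTANCES[key]
    raise ValueError(f"unknown task: {task!r}")
```

For example, `pick_instance("infer", large_model=True)` returns the Inferentia2 entry, and any task other than "train" or "infer" raises a `ValueError`.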

Build your own model

Next, you can build your own models with Amazon SageMaker and Amazon SageMaker JumpStart. You have two options:

- You can use Amazon SageMaker along with the purpose-built AWS Trainium chip to train your own LLMs.

- Alternatively, choose one of the pretrained language models available in SageMaker JumpStart and fine-tune it with your own data.
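The second option can be sketched with the SageMaker Python SDK's `JumpStartEstimator`. This is a minimal sketch, assuming the `sagemaker` package is installed, AWS credentials and a SageMaker execution role are configured, and your training data is already in Amazon S3. The helper names `is_s3_uri` and `fine_tune_jumpstart_model` are hypothetical, and the `accept_eula` environment setting and `"training"` channel follow AWS's published JumpStart Llama 2 examples; confirm both against the current SDK documentation for your chosen model.

```python
from typing import Optional


def is_s3_uri(uri: str) -> bool:
    """Return True if uri looks like s3://bucket[/prefix] (a basic format check only)."""
    if not uri.startswith("s3://"):
        return False
    bucket = uri[len("s3://"):].split("/", 1)[0]
    return bool(bucket)


def fine_tune_jumpstart_model(model_id: str, train_data_uri: str,
                              instance_type: Optional[str] = None):
    """Fine-tune a SageMaker JumpStart model on data in Amazon S3, then deploy it.

    Returns a SageMaker Predictor for the deployed endpoint.
    """
    if not is_s3_uri(train_data_uri):
        raise ValueError(f"expected an s3:// URI, got {train_data_uri!r}")

    # Imported here so the URI check above works without the SageMaker SDK installed.
    from sagemaker.jumpstart.estimator import JumpStartEstimator

    estimator = JumpStartEstimator(
        model_id=model_id,
        instance_type=instance_type,  # None lets JumpStart use the model's default
        # Gated models such as Llama 2 require accepting the end-user license
        # agreement; only set this if you have reviewed and accept it.
        environment={"accept_eula": "true"},
    )
    # JumpStart text-generation examples read fine-tuning data from the
    # "training" channel.
    estimator.fit({"training": train_data_uri})
    # Creates a real-time endpoint, which incurs cost until you delete it.
    return estimator.deploy()
```

A hypothetical call would be `fine_tune_jumpstart_model("meta-textgeneration-llama-2-7b", "s3://amzn-s3-demo-bucket/train/")`, where the bucket name is a placeholder. Validating the S3 location before importing the SDK keeps a typo from surfacing only after a training job has been requested.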

Foundation models

Finally, there are the models themselves. Pretraining an FM with billions of parameters takes significant time and resources. Why build your own FM when you can take advantage of the leading FMs already on the market? Consider the following:

- Amazon Bedrock is a fully managed service that makes FMs from leading AI startups and Amazon, including Amazon Titan models, available through an API. Customers can choose from a wide range of FMs to find the model that is best suited for their use case.

Because Amazon Bedrock is fully managed, you can build and scale generative AI applications with FMs without pretraining models or managing the underlying infrastructure yourself.
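Choosing a model and calling it through the Bedrock API can be sketched with the AWS SDK for Python (Boto3). This is a minimal sketch, assuming `boto3` is installed, credentials are configured, your account has been granted access to the model, and the Region is `us-east-1`. The helper names `text_models_from` and `ask_bedrock` are hypothetical, and `amazon.titan-text-express-v1` is only an example model ID whose availability varies by Region.

```python
from typing import Iterable, List


def text_models_from(summaries: Iterable[dict], provider: str) -> List[str]:
    """Return IDs of models from `provider` that produce text output.

    `summaries` is the "modelSummaries" list returned by the Bedrock
    ListFoundationModels API.
    """
    return [
        s["modelId"]
        for s in summaries
        if s.get("providerName") == provider
        and "TEXT" in s.get("outputModalities", [])
    ]


def ask_bedrock(prompt: str, model_id: str, region: str = "us-east-1") -> str:
    """Send one user message to a Bedrock model through the Converse API."""
    import boto3  # imported here so text_models_from works without the AWS SDK

    runtime = boto3.client("bedrock-runtime", region_name=region)
    response = runtime.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": 512, "temperature": 0.5},
    )
    # The reply is a message whose content is a list of blocks; take the first text block.
    return response["output"]["message"]["content"][0]["text"]
```

In practice you would pass `boto3.client("bedrock").list_foundation_models()["modelSummaries"]` to `text_models_from`, then call `ask_bedrock("Summarize this paragraph.", "amazon.titan-text-express-v1")`. The ListFoundationModels API can also filter server-side through its `byProvider` and `byOutputModality` parameters; the local filter is shown here only to make the selection step explicit.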

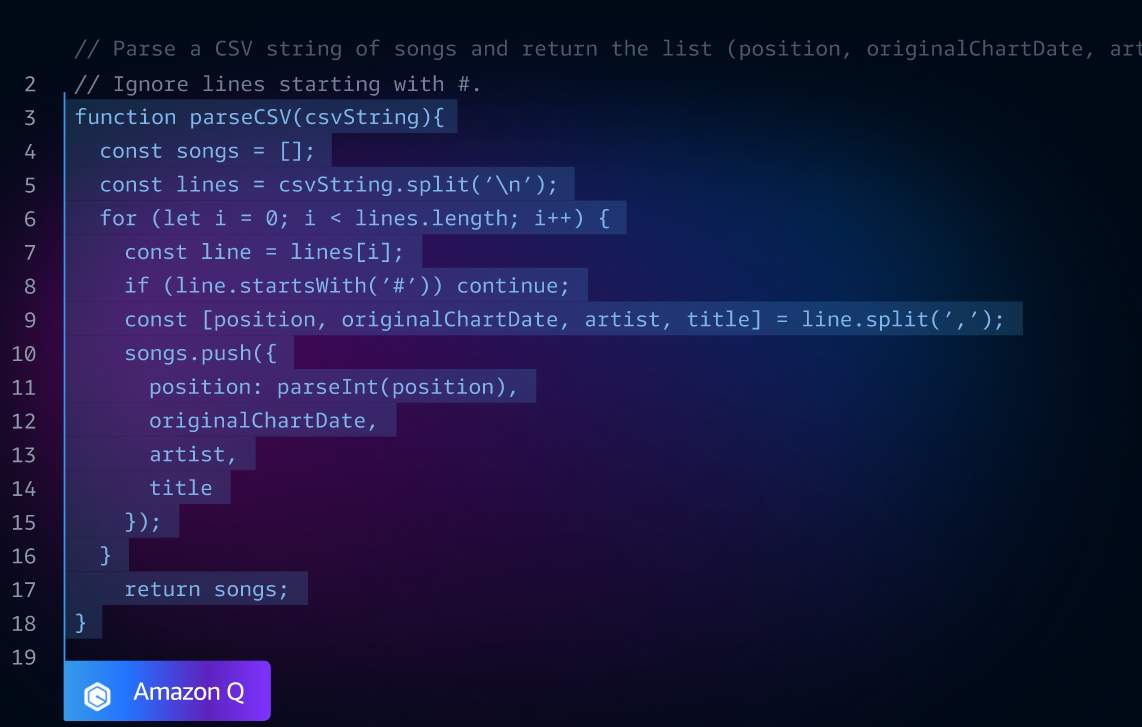

Generating code with Amazon Q Developer

Amazon Q Developer is one example of how businesses are using generative AI.

Amazon Q Developer serves as an AI coding companion: a generative AI tool that helps increase developer productivity by generating code.

During the Amazon Q Developer preview, Amazon ran a productivity challenge. Participants who used Amazon Q Developer were 27 percent more likely to complete tasks successfully and did so an average of 57 percent faster than those who didn't use Amazon Q Developer.

Developers are often forced to break their workflow to search the internet or to ask their colleagues for help completing a task.

Although this can help them obtain the starter code they need, it’s disruptive.

They must leave their integrated development environment (IDE) to search the web, post a question in a forum, or track down a colleague, which adds to the disruption.

Instead, Amazon Q Developer meets developers where they are most productive, providing real-time recommendations as they write code or comments in their IDE.
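To make the comment-driven workflow concrete, the following is an illustration of the kind of exchange described above: a developer types a comment in the IDE, and an AI coding companion suggests a matching function. The function name `day_of_week` and its body were written by hand for this example; they are not captured Amazon Q Developer output, and real suggestions will vary.

```python
from datetime import date

# What the developer types (the comment acts as the request):
# Parse an ISO 8601 date string such as "2024-05-01" and return the day of the week.


# The kind of completion an AI coding companion might suggest (hand-written illustration):
def day_of_week(iso_date: str) -> str:
    """Return the weekday name for a YYYY-MM-DD date string."""
    return date.fromisoformat(iso_date).strftime("%A")
```

The developer stays in the editor, reviews the suggestion, and accepts or edits it, rather than switching to a browser or forum to find starter code.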

Image created by Amazon Web Services.